3D Flappy Bird Neuro Evolution

This was a lot of fun. After making several NeuroEvolution 'games' I thought I pretty much knew all there was to know. But this project taught me a few new things, not just about 3D programming but also about how the neural network agent behaves when the third dimension is added.

I have 3 versions (well, 4 if you include the human-playable version).

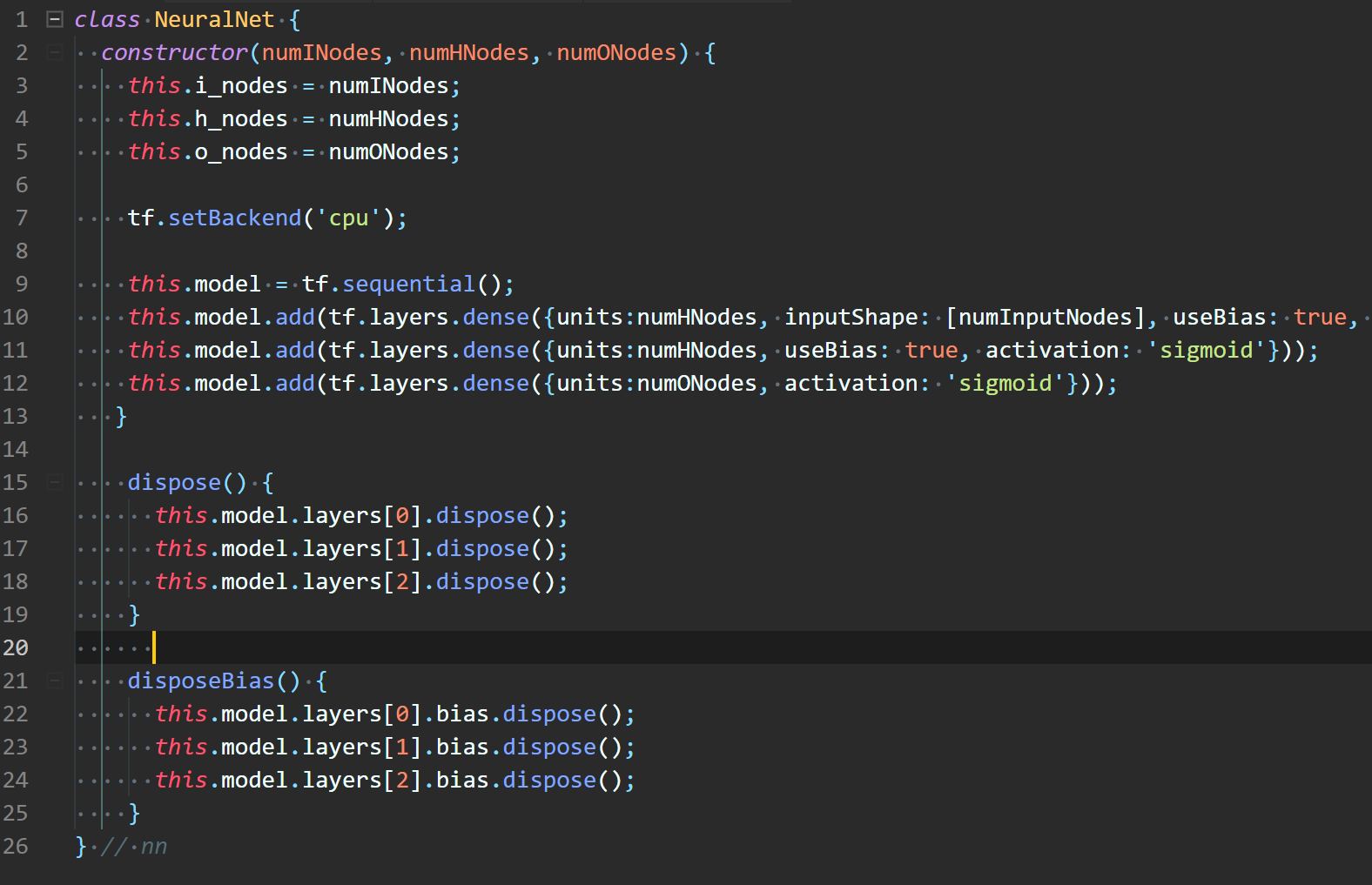

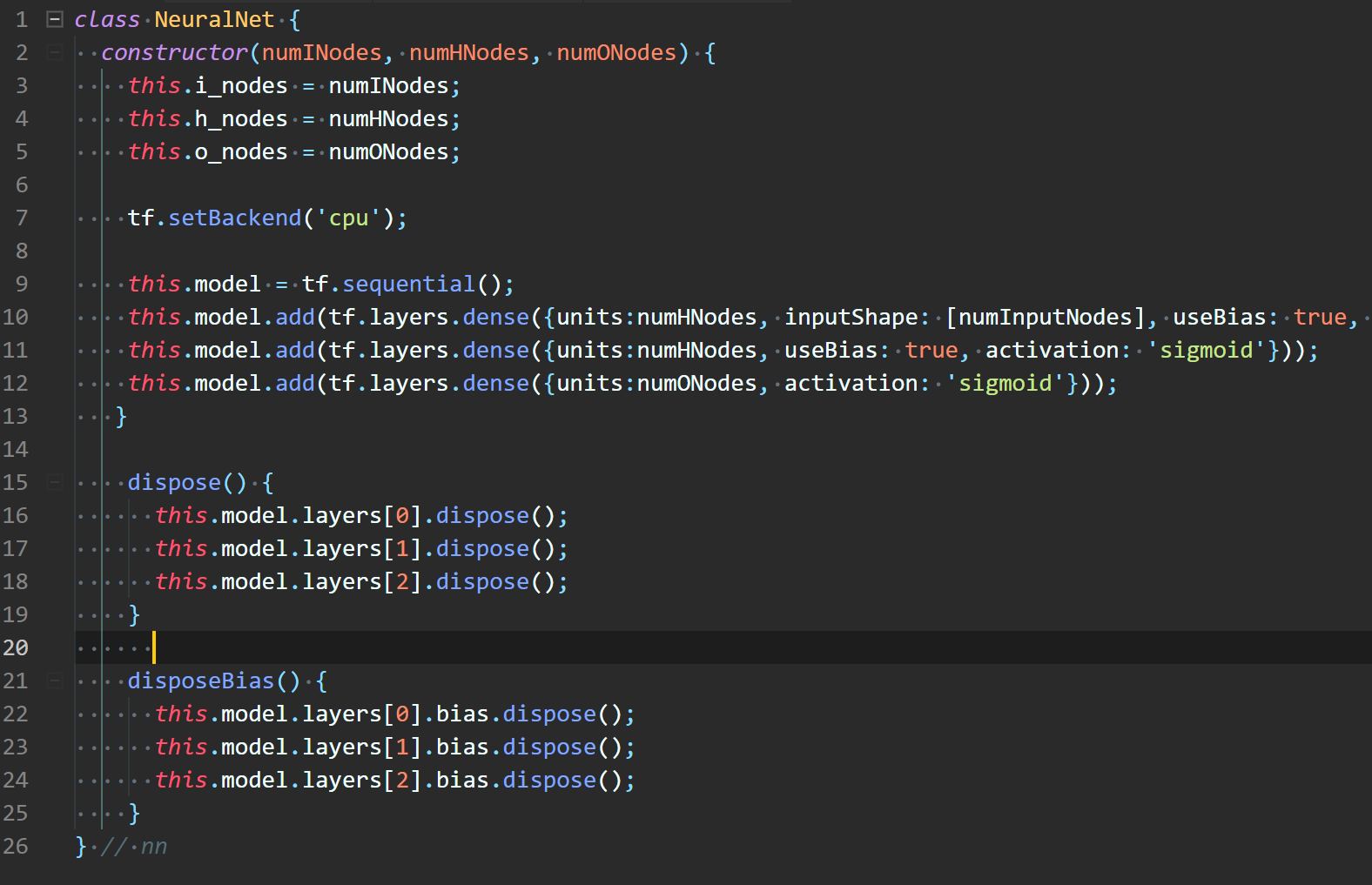

1) The neural network is coded without a library, as a set of matrices, using a lot of linear algebra to calculate the outputs.

2) Like #1, but uses the TensorFlow library to create tensors and uses their built-in methods to calculate the output (without explicitly creating a neural network model).

3) Uses TensorFlow.js' Layers API to create a model and return the output.
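Version 1 computes the outputs with plain matrices and linear algebra. The following is a minimal sketch of what a library-free feedforward pass can look like: weight matrices, matrix-vector products, and an activation function. It is not the author's code. The single hidden layer, the sigmoid activation, the layer sizes, and the names `SimpleNN`, `matVec`, and `randomMatrix` are all assumptions for illustration.

```typescript
// A library-free dense network: matrices as number[][], vectors as number[].
// Sizes, the sigmoid activation and all names here are illustrative assumptions.
type Matrix = number[][];

// rows x cols matrix of random weights in [-1, 1).
function randomMatrix(rows: number, cols: number): Matrix {
  return Array.from({ length: rows }, () =>
    Array.from({ length: cols }, () => Math.random() * 2 - 1),
  );
}

// (rows x cols matrix) · (vector of length cols) -> vector of length rows.
function matVec(m: Matrix, v: number[]): number[] {
  return m.map((row) => row.reduce((sum, w, j) => sum + w * v[j], 0));
}

const sigmoid = (x: number): number => 1 / (1 + Math.exp(-x));

class SimpleNN {
  weightsIH: Matrix; // hidden x input
  biasH: number[];
  weightsHO: Matrix; // output x hidden
  biasO: number[];

  constructor(nIn: number, nHidden: number, nOut: number) {
    this.weightsIH = randomMatrix(nHidden, nIn);
    this.biasH = randomMatrix(nHidden, 1).map((r) => r[0]);
    this.weightsHO = randomMatrix(nOut, nHidden);
    this.biasO = randomMatrix(nOut, 1).map((r) => r[0]);
  }

  // hidden = sigmoid(W_ih · x + b_h), output = sigmoid(W_ho · hidden + b_o)
  predict(inputs: number[]): number[] {
    const hidden = matVec(this.weightsIH, inputs).map((z, i) => sigmoid(z + this.biasH[i]));
    return matVec(this.weightsHO, hidden).map((z, i) => sigmoid(z + this.biasO[i]));
  }
}

// Example: 3 inputs, 8 hidden nodes, 2 outputs (sizes are hypothetical).
const brain = new SimpleNN(3, 8, 2);
const outputs = brain.predict([0.2, 0.5, 0.1]); // two numbers, each between 0 and 1
```

Versions 2 and 3 perform the same kind of computation, but the matrix math is delegated to TensorFlow.js tensors or to a Layers API model.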

Links to all the code are below:

(Note: the code linked below is all hosted on the P5JS web editor. P5JS is an excellent JavaScript library that makes developing anything in JavaScript much simpler. If you are not aware of Coding Train, do yourself a favor and watch Dan Shiffman's Coding Train on YouTube. His book, The Nature of Code, is also an amazing way to learn how to program.)

Link: Coding Train on YouTube.

Analysis:

Note: below, when I say 'Model code' I mean using the Layers API to create a model, and when I say 'Tensor code' I mean creating tensors for the weights and using the tensor methods to perform the linear algebra, without explicitly creating a model.

Performance between programs:

Oddly enough, this flew in the face of what I had learned previously: that the TensorFlow model had too much overhead to run effectively in the browser. Using the WEBGL renderer seems to make a huge difference in performance. However, I still have to restrict the population size in the code that uses the TensorFlow model. The TF model can handle a population size of around 300 and still run smoothly and fast enough. With that population size it can 'solve' the game in around 25-30 generations.

By contrast, in the plain code with no TF, a population size of 500-750 is easily usable, and even 900 runs acceptably. However, I found that the code that doesn't use any of the TF library functions performs more erratically: the program will sometimes 'solve' the game in fewer than 10 generations and other times take around 40 generations.

The Model code and the Tensor code generally 'solve' the game reliably in around 25 generations.

Combating Memory Loss:

This type of neural network can, in general, suffer from memory loss. That is, if the environment changes in such a way that the top performer suddenly dies off, all of its learned traits can be lost. An agent could have nearly solved an environment, but if a small change causes it to die early, the process of natural selection can bypass that top performer. All its desirable behavioral traits can then be lost, basically resetting the whole scenario back to the beginning.

One way I combat this memory loss is to save the top performer from each generation for 5 generations (easily configurable to save X generations). That is, the top performer of a generation will be run in at least the next 4 generations before being lost. Therefore, a fluke loss in one or two generations will not reset the whole scenario back to square one.
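The "keep each champion for a few generations" idea can be sketched as a small rolling archive. The names `ChampionArchive`, `nextGeneration`, and `breed`, and the `Agent` shape, are hypothetical. `breed` stands in for whatever selection and mutation step the real program uses.

```typescript
// Hypothetical agent shape: only the fields this sketch needs.
interface Agent {
  id: number;
  fitness: number;
}

// Total generations a champion is kept, counting the one it won in.
const KEEP_GENERATIONS = 5;

class ChampionArchive<T extends Agent> {
  private champions: T[] = [];
  private readonly keep: number;

  constructor(keep: number) {
    this.keep = keep;
  }

  // Store this generation's best agent (population must be non-empty);
  // drop the oldest champion once more than `keep` are stored.
  record(population: T[]): void {
    const best = population.reduce((a, b) => (b.fitness > a.fitness ? b : a));
    this.champions.push(best);
    if (this.champions.length > this.keep) this.champions.shift();
  }

  // Distinct champions to carry into the next generation (an agent that wins
  // several generations in a row is only carried once).
  survivors(): T[] {
    return Array.from(new Set(this.champions));
  }
}

// Build the next population: archived champions first, then offspring.
// `breed` is a placeholder for the program's own selection + mutation step.
function nextGeneration<T extends Agent>(
  archive: ChampionArchive<T>,
  popSize: number,
  breed: () => T,
): T[] {
  const next = archive.survivors().slice(0, popSize);
  while (next.length < popSize) next.push(breed());
  return next;
}

// Each champion then runs in the next 4 generations (5 counting its own).
const archive = new ChampionArchive<Agent>(KEEP_GENERATIONS - 1);
```

Champions are carried forward unchanged, so one fluke run costs them a single generation's score, not their weights. A champion leaves the archive only after four newer champions have been recorded.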

How did this project differ from the normal 2D ones (car track, dino game, 2D Flappy Bird, etc.)?

The one Big Takeaway I got from this project is that this type of neural network does not like negative numbers as inputs.

In this case, the agent's inputs are 1) its delta X distance to the target, 2) the delta Y distance, and 3) the delta Z distance.

(Note: I never use the actual position of the agent as an input. Doing so leads the network to 'memorize' the layout of the environment instead of reacting to the situations in the environment.)
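To make the point concrete: when the inputs are offsets, the same relative situation produces the same inputs wherever it happens in the level. This is a minimal sketch. The `Vec3` type, the `deltaInputs` name, and the "target minus agent" sign convention are assumptions. The target might be something like the centre of the next gap, which is also an assumption.

```typescript
// Hypothetical 3D point type.
interface Vec3 {
  x: number;
  y: number;
  z: number;
}

// Inputs are offsets from the agent to its target (assumed convention: target minus agent),
// never the agent's absolute position.
function deltaInputs(agent: Vec3, target: Vec3): number[] {
  return [target.x - agent.x, target.y - agent.y, target.z - agent.z];
}

// The same relative situation in two different parts of the level...
const early = deltaInputs({ x: 0, y: 0, z: 0 }, { x: 10, y: -5, z: 20 });
const late = deltaInputs({ x: 100, y: 50, z: 300 }, { x: 110, y: 45, z: 320 });
// ...gives identical inputs: both are [10, -5, 20].
```

Note the -5. Signed deltas like this are the negative inputs discussed below.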

I thought that this would suffice: that the network would be able to figure out that a negative delta X meant it had to go right to bring the delta to near 0, and a positive delta X meant it had to go left until the delta was near 0. But this didn't work. It was never able to figure out how to go right; it didn't like the negative input.

So I created 2 new boolean inputs: one is on when delta X is positive and the other is on when delta X is negative, so the direction the agent needs to move is given to the network directly.

I then made the actual delta X input always positive by taking its absolute value.
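The encoding just described can be sketched like this. The `encodeInputs` helper and the input order are hypothetical. Only delta X is split, as in the text; delta Y and delta Z pass through unchanged.

```typescript
// |dx| plus two on/off flags for the sign of dx. Helper name and input order are hypothetical;
// dy and dz are passed through unchanged because the text only describes this change for delta X.
function encodeInputs(dx: number, dy: number, dz: number): number[] {
  const dxPositive = dx > 0 ? 1 : 0; // on when delta X is positive
  const dxNegative = dx < 0 ? 1 : 0; // on when delta X is negative (both off when dx is 0)
  return [Math.abs(dx), dxPositive, dxNegative, dy, dz];
}

encodeInputs(-3, 1, 2); // returns [3, 0, 1, 1, 2]
```

With this layout the network takes 5 inputs instead of 3, so its input layer has to be sized to match.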

Once I did that, the program solved the game in 25 generations and would reliably solve it on every attempt.